AI has come a long way in helping businesses increase their operational efficiency and provide top-notch services to their customers. Organizations now realize that to increase productivity further, they need to leverage the customizability of AI, especially of Large Language Models (LLMs).

LLMs have been on the market for a while, and businesses know their importance and the value they provide. Organizations now want to go a step further and customize LLMs for their business so that the models serve their stakeholders efficiently. As of 2024, there are quite a few LLMs to choose from, such as OpenAI's GPT-4, Meta’s LLaMA, Google's LaMDA, Alpaca, Claude, PaLM, and many more.

In this blog, we will dive deep into fine-tuning Meta’s LLaMA 3.1, released in 2024, which offers both power and efficiency in a compact size. Fine-tuning LLaMA 3.1 can enable you to tailor the model to specific tasks, unlocking new levels of performance and customization.

This blog will guide you on the following points:

- Introducing Llama 3.1

- Why fine-tune Llama 3.1?

- What are the prerequisites and techniques to fine-tune Llama 3.1

- Step-by-step process for fine-tuning Llama 3.1

What is LLaMA 3.1?

Llama 3.1 is a collection of pre-trained and instruction-tuned models in sizes of 8B, 70B, and 405B parameters. These models support long context lengths of up to 128k tokens and are optimized for multilingual dialogue use cases. According to Meta's model card, the pretraining data has a cutoff of December 2023, so the model can provide information on relatively recent events as well. Llama 3.1 enables the development of more advanced AI models and AI agents.

Why Fine-Tune LLaMA 3.1?

What is fine-tuning? Fine-tuning an LLM allows you to customize an existing model to behave according to your particular use case or domain, without developing an LLM from scratch. Llama 3.1 can be fine-tuned to enhance a customer support chatbot, create domain-specific content generators, or develop a sophisticated AI agent. Fine-tuning improves the model's performance on specific tasks and can help mitigate biases by training it on a specialized dataset, enabling it to understand and respond to nuanced queries more effectively. Fine-tuning an LLM on labeled data is called supervised fine-tuning (SFT); we will look at how it is done later in this blog.

Prerequisites for Fine-Tuning

Before you begin, ensure that the following prerequisites are met:

- Computational Resources: LLaMA 3.1, while efficient, requires substantial computational power, particularly GPUs with high VRAM or TPUs, especially for larger datasets.

- Pre-trained LLaMA 3.1 Model: Obtain the latest Llama 3.1 model from Meta’s open-source repository.

- Dataset: Preparing a dataset relevant to your specific task is crucial. It should be formatted appropriately, with input-output pairs to match the task you’re fine-tuning for.

- Deep Learning Frameworks: Familiarity with frameworks like PyTorch and the Hugging Face Transformers library, which supports Llama 3.1, is necessary.

Supervised Fine-tuning (SFT) Techniques

As discussed, training on a labeled dataset is called supervised fine-tuning. The three most popular SFT techniques are: (1) Full Fine-tuning, (2) Low-Rank Adaptation (LoRA), and (3) Quantization-aware Low-Rank Adaptation (QLoRA).

1. Full fine-tuning:

It is the most straightforward SFT technique. It involves retraining all parameters of a pre-trained model on an instruction dataset. This method often provides the best results; however, it requires significant computational resources, with several high-end GPUs needed to fine-tune even an 8B model. Because it modifies the entire model, it is also the most destructive method and may lead to catastrophic forgetting of previous skills and knowledge.

2. Low-Rank Adaptation (LoRA):

It is a popular parameter-efficient fine-tuning technique. Instead of retraining the entire model, it freezes the weights and introduces small adapters (low-rank matrices) at each targeted layer. This allows LoRA to train drastically fewer parameters than full fine-tuning (less than 1%), reducing both memory usage and training time. This method is non-destructive since the original parameters are frozen, and adapters can then be switched or combined at will.
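
To make the adapter idea concrete, here is a minimal sketch of a single LoRA layer in plain Python. It assumes the usual LoRA formulation, in which the layer output is W x + (alpha / r) * B (A x); the toy dimensions and the helper names `matvec` and `lora_forward` are chosen only for illustration and are not part of any library.

```python
import random

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, alpha, r):
    """Return W x + (alpha / r) * B (A x); W is frozen, only A and B would be trained."""
    base = matvec(W, x)                # frozen pre-trained path
    update = matvec(B, matvec(A, x))   # low-rank adapter path
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

random.seed(0)
d_out, d_in, r, alpha = 64, 64, 2, 2   # toy sizes, chosen only for illustration
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen weights
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # r x d_in, random init
B = [[0.0] * r for _ in range(d_out)]                                  # d_out x r, zero init
x = [random.gauss(0, 1) for _ in range(d_in)]

# With B initialised to zero the adapter contributes nothing yet,
# so the adapted layer starts out identical to the frozen layer.
assert lora_forward(W, A, B, x, alpha, r) == matvec(W, x)

full_params = d_out * d_in           # parameters updated by full fine-tuning
lora_params = r * (d_in + d_out)     # parameters updated by LoRA
print(f"full: {full_params} parameters, LoRA: {lora_params} parameters")
```

Because W is never modified, removing or swapping the adapter only means dropping or replacing A and B, which is why the method is described as non-destructive.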

3. Quantization-aware Low-Rank Adaptation (QLoRA):

It is an extension of LoRA that offers even greater memory savings by keeping the frozen base model in 4-bit quantized form. It provides up to 33% additional memory reduction compared to standard LoRA, making it particularly useful when GPU memory is constrained. This increased efficiency comes at the cost of longer training times, with QLoRA typically taking about 39% more time to train than regular LoRA.

While QLoRA requires more training time, its substantial memory savings can make it the only viable option in scenarios where GPU memory is limited. For this reason, this is the technique we will use in the next section to fine-tune a Llama 3.1 8B model on Google Colab.
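
The memory argument can be checked with back-of-the-envelope arithmetic. The sketch below estimates only the memory needed to hold the weights of an 8B model at different precisions. The figure of 16 bytes per parameter for full fine-tuning is a common rule of thumb for Adam with mixed precision and is an assumption here, not a measurement; activations, quantization constants, and adapter weights are ignored.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Memory needed just to store the weights, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9

N_PARAMS = 8e9  # assumption: "8B" taken as roughly 8 billion parameters

fp16_gb = weight_memory_gb(N_PARAMS, 16)  # frozen base weights for LoRA in 16-bit
int4_gb = weight_memory_gb(N_PARAMS, 4)   # frozen base weights for QLoRA in 4-bit

# Rule of thumb (assumption): weights + gradients + optimizer states for
# full fine-tuning take about 16 bytes per parameter, before activations.
full_ft_gb = N_PARAMS * 16 / 1e9

print(f"16-bit weights: {fp16_gb:.0f} GB")
print(f"4-bit weights: {int4_gb:.0f} GB")
print(f"full fine-tuning (rough): {full_ft_gb:.0f} GB")
```

Under these assumptions the 4-bit base model needs about a quarter of the weight memory of the 16-bit one, which is what makes a single Colab-class GPU a realistic target.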

Step-by-step Process to Fine-Tune LLaMA 3.1

Step 1: Setting Up Your Environment

Install the necessary libraries

!pip install transformers xformers

# Installs Unsloth, Xformers (Flash Attention) and all other packages!

!pip install "unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git"

# Pick the xformers version that matches the installed Torch (Torch 2.3 -> xformers 0.0.27)

from torch import __version__; from packaging.version import Version as V

xformers = "xformers==0.0.27" if V(__version__) < V("2.4.0") else "xformers"

!pip install --no-deps {xformers} trl peft accelerate bitsandbytes triton

Step 2: Loading the Model

Load the LLaMA 3.1 model and tokenizer

from unsloth import FastLanguageModel

import torch

max_seq_length = 2048 # Choose any! We auto support RoPE Scaling internally!

dtype = None # None for auto detection. Float16 for Tesla T4, V100, Bfloat16 for Ampere+

load_in_4bit = True # Use 4bit quantization to reduce memory usage. Can be False.

# 4bit pre quantized models we support for 4x faster downloading + no OOMs.

fourbit_models = [

"unsloth/Meta-Llama-3.1-8B-bnb-4bit", # Llama-3.1 15 trillion tokens model 2x faster!

"unsloth/Meta-Llama-3.1-8B-Instruct-bnb-4bit",

"unsloth/Meta-Llama-3.1-70B-bnb-4bit",

"unsloth/Meta-Llama-3.1-405B-bnb-4bit", # We also uploaded 4bit for 405b!

"unsloth/Mistral-Nemo-Base-2407-bnb-4bit", # New Mistral 12b 2x faster!

"unsloth/Mistral-Nemo-Instruct-2407-bnb-4bit",

"unsloth/mistral-7b-v0.3-bnb-4bit", # Mistral v3 2x faster!

"unsloth/mistral-7b-instruct-v0.3-bnb-4bit",

"unsloth/Phi-3.5-mini-instruct", # Phi-3.5 2x faster!

"unsloth/Phi-3-medium-4k-instruct",

"unsloth/gemma-2-9b-bnb-4bit",

"unsloth/gemma-2-27b-bnb-4bit", # Gemma 2x faster!

] # More models at https://huggingface.co/unsloth

model, tokenizer = FastLanguageModel.from_pretrained(

model_name = "unsloth/Meta-Llama-3.1-8B",

max_seq_length = max_seq_length,

dtype = dtype,

load_in_4bit = load_in_4bit,

# token = "hf_...", # use one if using gated models like meta-llama/Llama-2-7b-hf

)

Now that our model is loaded in 4-bit precision, we want to prepare it for parameter-efficient fine-tuning with LoRA adapters. LoRA has three important parameters:

- Rank (r): Rank determines LoRA matrix size. Rank typically starts at 8 but can go up to 256. Higher ranks can store more information but increase the computational and memory cost of LoRA. We set it to 16 here.

- Alpha (α): It is a scaling factor for updates. Alpha directly impacts the adapters' contribution and is often set to 1 or 2 times the rank value.

- Target modules: LoRA can be applied to various model components, including attention mechanisms (Q, K, V matrices), output projections, feed-forward blocks, and linear output layers. However, adapting more modules increases the number of trainable parameters and memory needs.

Here, we set r=16, α=16, and target every linear module to maximize quality. We don't use dropout and biases for faster training.

model = FastLanguageModel.get_peft_model(

model,

r = 16, # Choose any number > 0 ! Suggested 8, 16, 32, 64, 128

target_modules = ["q_proj", "k_proj", "v_proj", "o_proj",

"gate_proj", "up_proj", "down_proj",],

lora_alpha = 16,

lora_dropout = 0, # Supports any, but = 0 is optimized

bias = "none", # Supports any, but = "none" is optimized

# [NEW] "unsloth" uses 30% less VRAM, fits 2x larger batch sizes!

use_gradient_checkpointing = "unsloth", # True or "unsloth" for very long context

random_state = 3407,

use_rslora = False, # We support rank stabilized LoRA

loftq_config = None, # And LoftQ

)
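
As a rough check on what these settings imply, the sketch below counts the trainable adapter parameters for r = 16 on all seven target modules. The layer shapes are assumptions taken from the published Llama 3.1 8B configuration (hidden size 4096, feed-forward size 14336, 32 layers, 8 key/value heads of dimension 128) and do not come from this blog; `lora_trainable_params` is a hypothetical helper, not an Unsloth or PEFT function.

```python
# Assumed Llama 3.1 8B shapes (from the published model config, not from this blog).
HIDDEN = 4096          # hidden_size
INTERMEDIATE = 14336   # intermediate_size of the feed-forward block
KV_DIM = 1024          # num_key_value_heads (8) * head_dim (128)
NUM_LAYERS = 32        # num_hidden_layers

# (input features, output features) of each targeted linear module
MODULE_SHAPES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}

def lora_trainable_params(r, target_modules):
    """Count adapter parameters: each module adds r * (in_features + out_features)."""
    per_layer = sum(r * sum(MODULE_SHAPES[name]) for name in target_modules)
    return per_layer * NUM_LAYERS

all_linear = list(MODULE_SHAPES)
attention_only = ["q_proj", "k_proj", "v_proj", "o_proj"]

print(lora_trainable_params(16, all_linear))      # r = 16 on every linear module
print(lora_trainable_params(16, attention_only))  # r = 16 on attention projections only
```

Under these assumptions, targeting every linear module gives about 42 million trainable parameters, roughly half a percent of an 8-billion-parameter model, while restricting LoRA to the attention projections cuts that to about a third.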

Step 3: Preparing the Dataset

We now use yahma's cleaned Alpaca dataset, a filtered version of the original 52K-example Alpaca dataset. You can replace this code section with your own data prep.

- To train only on completions (ignoring the user's input) read TRL's docs.

- Remember to add the EOS_TOKEN to the tokenized output!! Otherwise, you'll get infinite generations!

alpaca_prompt = """Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:

{}

### Input:

{}

### Response:

{}"""

EOS_TOKEN = tokenizer.eos_token # Must add EOS_TOKEN

def formatting_prompts_func(examples):

    instructions = examples["instruction"]

    inputs = examples["input"]

    outputs = examples["output"]

    texts = []

    for instruction, input, output in zip(instructions, inputs, outputs):

        # Must add EOS_TOKEN, otherwise your generation will go on forever!

        text = alpaca_prompt.format(instruction, input, output) + EOS_TOKEN

        texts.append(text)

    return { "text" : texts, }

from datasets import load_dataset

dataset = load_dataset("yahma/alpaca-cleaned", split = "train")

dataset = dataset.map(formatting_prompts_func, batched = True,)
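
Before mapping the whole dataset, it can help to check the formatting logic on a tiny hand-written batch. The sketch below is a stand-alone version of the same idea: it uses a shortened prompt template, a placeholder string instead of `tokenizer.eos_token`, and a hypothetical two-row batch, so it runs without loading a model or downloading data.

```python
# Stand-in for tokenizer.eos_token so the sketch runs without loading a model.
EOS_TOKEN = "<eos>"

# Shortened template, for illustration only.
alpaca_prompt = """### Instruction:
{}

### Input:
{}

### Response:
{}"""

def formatting_prompts_func(examples):
    """Turn a batch of columns into a batch of full training texts."""
    texts = []
    for instruction, input_text, output in zip(
        examples["instruction"], examples["input"], examples["output"]
    ):
        texts.append(alpaca_prompt.format(instruction, input_text, output) + EOS_TOKEN)
    return {"text": texts}

# Hypothetical two-row batch in the column-oriented layout that a
# batched dataset.map(...) call passes to the function.
batch = {
    "instruction": ["Add the numbers.", "Name a primary color."],
    "input": ["2 and 3", ""],
    "output": ["5", "Red"],
}

formatted = formatting_prompts_func(batch)
assert len(formatted["text"]) == 2
assert all(text.endswith(EOS_TOKEN) for text in formatted["text"])
print(formatted["text"][0])
```

The second assertion is the important one: every training text must end with the EOS token so the fine-tuned model learns where a response stops.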

Step 4: Supervised Fine-Tuning Process

Now let's use Hugging Face TRL's SFTTrainer! We do 60 steps to speed things up, but for a full run you can set num_train_epochs=1 and max_steps=None. Unsloth also supports TRL's DPOTrainer!
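
To see how short a 60-step run is compared with a full epoch, the sketch below does the arithmetic. It assumes the batch settings used in this step's TrainingArguments (per_device_train_batch_size = 2, gradient_accumulation_steps = 4), a single GPU, and a round figure of 52,000 examples; `steps_per_epoch` is a hypothetical helper.

```python
import math

def steps_per_epoch(num_examples, per_device_batch_size, grad_accum_steps, num_devices=1):
    """Optimizer steps needed to see every example once."""
    effective_batch = per_device_batch_size * grad_accum_steps * num_devices
    return math.ceil(num_examples / effective_batch)

NUM_EXAMPLES = 52_000     # assumption: "52K" taken as a round number
effective_batch = 2 * 4   # per_device_train_batch_size * gradient_accumulation_steps
examples_seen = 60 * effective_batch  # max_steps = 60

print(steps_per_epoch(NUM_EXAMPLES, 2, 4))                  # 6500
print(f"{examples_seen / NUM_EXAMPLES:.1%} of one epoch")   # 0.9% of one epoch
```

In other words, the 60-step demo touches under one percent of the data, so it is a smoke test of the pipeline rather than a real fine-tune.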

We're now ready to specify the training parameters for our run. The most important hyperparameters you need to know:

- Learning rate: It controls how strongly the model updates its parameters at each step. If it is too low, training can get stuck; if it is too high, training can become unstable. Both degrade performance.

- LR scheduler: It adjusts the learning rate (LR) during training, starting with a higher LR for initial rapid convergence and then decreasing it for careful convergence.

- Batch size: It is the number of training examples used in one iteration of training. Larger batch sizes make training more stable and can improve training speed, but they require more memory.

- Num epochs: The number of complete passes of the learning algorithm through the training dataset. More epochs can improve performance; however, too many epochs can cause overfitting.

- Optimizer: It is an algorithm used to adjust the parameters of a model to minimize the loss function and improve accuracy.

- Weight decay: A regularization technique that adds a penalty for large weights to the loss function. It helps prevent overfitting; however, too much weight decay can impede learning.

- Warmup steps: A period at the beginning of training where the learning rate is gradually increased from a small value to the initial learning rate.

- Packing: Batches have a pre-defined sequence length. To increase efficiency, we can combine multiple short samples into a single sequence of that length.
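
Two of these ideas are easy to sketch in a few lines. The first function below reproduces the shape of a linear schedule with warmup, using the values from this step (learning rate 2e-4, 5 warmup steps, 60 total steps); the exact per-step values inside the trainer may differ slightly. The second is a simplified first-fit illustration of packing that works on sample lengths only. Both `linear_schedule_lr` and `pack_greedy` are hypothetical helpers, not TRL or Transformers functions.

```python
def linear_schedule_lr(step, base_lr=2e-4, warmup_steps=5, max_steps=60):
    """Learning rate at a given step: linear warmup, then linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, max_steps - step)
    return base_lr * remaining / max(1, max_steps - warmup_steps)

def pack_greedy(sample_lengths, max_seq_length):
    """Group sample lengths into sequences without exceeding max_seq_length.

    Simplified first-fit sketch: real packing concatenates token ids and
    may handle sequence boundaries differently.
    """
    packed = []
    for length in sample_lengths:
        for group in packed:
            if sum(group) + length <= max_seq_length:
                group.append(length)
                break
        else:
            # No existing sequence has room, so start a new one.
            packed.append([length])
    return packed

for step in (0, 1, 5, 30, 60):
    print(step, linear_schedule_lr(step))

# Six short samples fit into two 2048-token sequences instead of six.
print(pack_greedy([900, 700, 400, 1000, 600, 300], max_seq_length=2048))
```

The schedule starts at zero, peaks at the configured learning rate once warmup ends, and returns to zero at the final step. The packing example shows why short samples benefit most: fewer sequences mean fewer padded tokens per batch.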

from trl import SFTTrainer

from transformers import TrainingArguments

from unsloth import is_bfloat16_supported

trainer = SFTTrainer(

model = model,

tokenizer = tokenizer,

train_dataset = dataset,

dataset_text_field = "text",

max_seq_length = max_seq_length,

dataset_num_proc = 2,

packing = False, # Can make training 5x faster for short sequences.

args = TrainingArguments(

per_device_train_batch_size = 2,

gradient_accumulation_steps = 4,

warmup_steps = 5,

# num_train_epochs = 1, # Set this for 1 full training run.

max_steps = 60,

learning_rate = 2e-4,

fp16 = not is_bfloat16_supported(),

bf16 = is_bfloat16_supported(),

logging_steps = 1,

optim = "adamw_8bit",

weight_decay = 0.01,

lr_scheduler_type = "linear",

seed = 3407,

output_dir = "outputs",

),

)

Show current memory stats

# @title Show current memory stats

gpu_stats = torch.cuda.get_device_properties(0)

start_gpu_memory = round(torch.cuda.max_memory_reserved() / 1024 / 1024 / 1024, 3)

max_memory = round(gpu_stats.total_memory / 1024 / 1024 / 1024, 3)

print(f"GPU = {gpu_stats.name}. Max memory = {max_memory} GB.")

print(f"{start_gpu_memory} GB of memory reserved.")

Step 5: Training and Evaluation

trainer_stats = trainer.train()

Show final memory and time stats

#@title Show final memory and time stats

used_memory = round(torch.cuda.max_memory_reserved() / 1024 / 1024 / 1024, 3)

used_memory_for_lora = round(used_memory - start_gpu_memory, 3)

used_percentage = round(used_memory /max_memory*100, 3)

lora_percentage = round(used_memory_for_lora/max_memory*100, 3)

print(f"{trainer_stats.metrics['train_runtime']} seconds used for training.")

print(f"{round(trainer_stats.metrics['train_runtime']/60, 2)} minutes used for training.")

print(f"Peak reserved memory = {used_memory} GB.")

print(f"Peak reserved memory for training = {used_memory_for_lora} GB.")

print(f"Peak reserved memory % of max memory = {used_percentage} %.")

print(f"Peak reserved memory for training % of max memory = {lora_percentage} %.")

Step 6: Inference

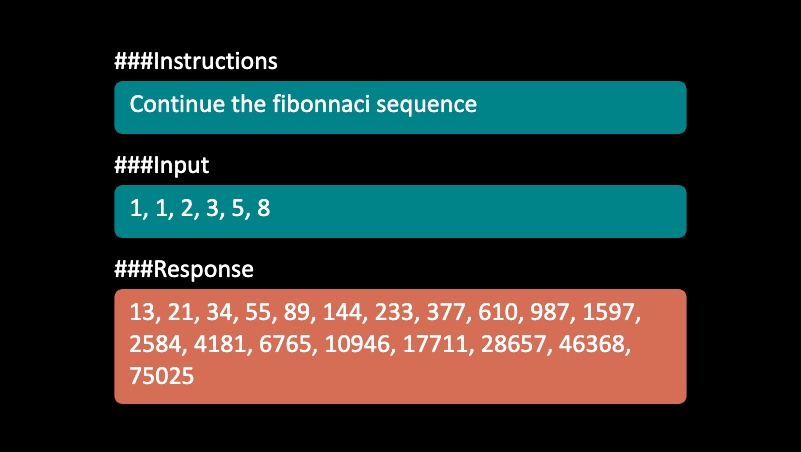

Let's run the model! You can change the instruction and input - leave the output blank! We use FastLanguageModel.for_inference() to get 2 times faster inference.

# alpaca_prompt = Copied from above

FastLanguageModel.for_inference(model) # Enable native 2x faster inference

inputs = tokenizer(

[

alpaca_prompt.format(

"Continue the Fibonacci sequence.", # instruction

"1, 1, 2, 3, 5, 8", # input

"", # output - leave this blank for generation!

)

], return_tensors = "pt").to("cuda")

outputs = model.generate(**inputs, max_new_tokens = 64, use_cache = True)

tokenizer.batch_decode(outputs)

Use a TextStreamer for continuous inference - so you can see the generation token by token, instead of waiting the whole time!

# alpaca_prompt = Copied from above

FastLanguageModel.for_inference(model) # Enable native 2x faster inference

inputs = tokenizer(

[

alpaca_prompt.format(

"Continue the Fibonacci sequence.", # instruction

"1, 1, 2, 3, 5, 8", # input

"", # output - leave this blank for generation!

)

], return_tensors = "pt").to("cuda")

from transformers import TextStreamer

text_streamer = TextStreamer(tokenizer)

_ = model.generate(**inputs, streamer = text_streamer, max_new_tokens = 128)

Output:

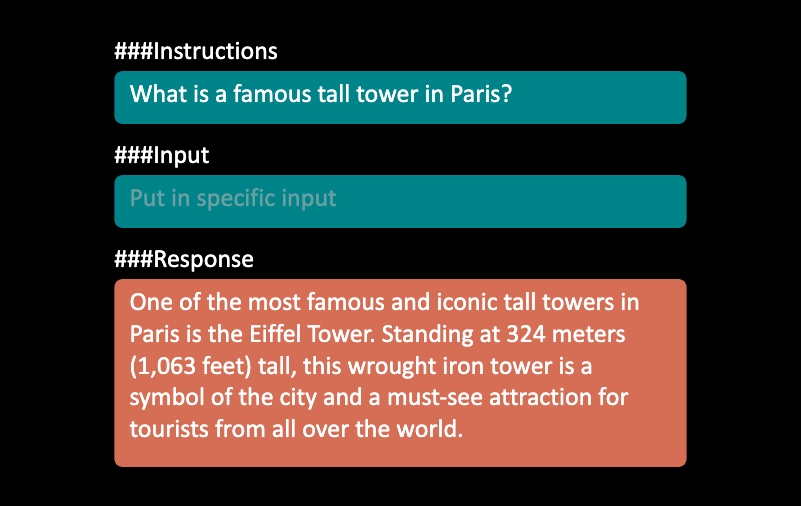

Step 7: Saving, loading finetuned models

To save the final model as LoRA adapters, either use Huggingface's push_to_hub for an online save or save_pretrained for a local save.

This ONLY saves the LoRA adapters, and not the full model.

model.save_pretrained("lora_model") # Local saving

tokenizer.save_pretrained("lora_model")

# model.push_to_hub("your_name/lora_model", token = "...") # Online saving

# tokenizer.push_to_hub("your_name/lora_model", token = "...") # Online saving

Now if you want to load the LoRA adapters we just saved for inference, set False to True:

if False:

    from unsloth import FastLanguageModel

    model, tokenizer = FastLanguageModel.from_pretrained(

        model_name = "lora_model", # YOUR MODEL YOU USED FOR TRAINING

        max_seq_length = max_seq_length,

        dtype = dtype,

        load_in_4bit = load_in_4bit,

    )

    FastLanguageModel.for_inference(model) # Enable native 2x faster inference

# alpaca_prompt = You MUST copy from above!

inputs = tokenizer(

[

alpaca_prompt.format(

"What is a famous tall tower in Paris?", # instruction

"", # input

"", # output - leave this blank for generation!

)

], return_tensors = "pt").to("cuda")

from transformers import TextStreamer

text_streamer = TextStreamer(tokenizer)

_ = model.generate(**inputs, streamer = text_streamer, max_new_tokens = 128)

Output:

End Note

Llama 3.1 offers a powerful yet efficient platform for fine-tuning, making it an excellent choice for a wide range of AI applications. By following the steps and best practices outlined in this guide, you can unlock the full potential of Llama 3.1, tailoring it to meet the specific needs of your project. Whether you’re working in research, industry, or creative AI applications, Llama 3.1 provides the tools you need to succeed.

As AI continues to evolve, staying updated with the latest models like Llama 3.1 and mastering fine-tuning techniques will be key to developing cutting-edge AI solutions.

We, at Seaflux, are AI & Machine Learning enthusiasts who are helping enterprises worldwide. Have a query or want to discuss AI projects where LiteLLM can be leveraged? Schedule a meeting with us, and we'll be happy to talk to you.

Minali Jain

Software Engineer