Introduction

Small and Medium Enterprises (SMEs) are often required to deal with sensitive data, that needs to be handled with the utmost security and privacy. With AI and data analytics advancements, these cutting-edge technologies are being leveraged by organizations to get their data to talk to them and make informed decisions. However, sometimes organizations need to adhere to security compliance, like HIPPAA, where they are not allowed to store the data at a different location or have it on-prem rather than on the cloud. This leads to inaccessible LLM models that are cloud-based which can be highly inefficient for the organization.

Fortunately, there is a solution: Implementing LLM on your local system/server that can be leveraged for content generation and decision-making in your organization.

In this blog, we will dive into the benefits of LLM, the challenges in implementing LLM locally, and a guidebook for implementing LLM on your machine.

Benefits of LLM Running On Local System

Let's now get into the pros of running LLM locally.

1. Better Control: Hardware, training data, software, and more are under your control and can be changed according to your requirements. You have the liberty to comply with any regulations that may fall under your criteria to provide a better overall service.

2. Lower Operational Costs: Once set up and running, LLM is quite affordable compared to paying the cloud costs. You just have to maintain your local machine.

3. Reduced Latency: With the elimination of the network system, the response time between the prompt and the response is reduced.

4. Offline Accessibility: You do not require an active internet connection throughout to access the LLM, providing flexibility and independence. An internal network system can be made to provide access to the required system.

5. Privacy Matters: Running LLM locally allows you to have control over your data and model, which will reduce the chances of data breaches. Furthermore, the dependency on third-party services is reduced, resulting in a more secure system.

Challenges of Running LLM Locally

As we saw the benefits, now let's go through the challenges that you need to tackle in running the LLM on the local system

1. Higher Upfront Cost: Setting up the software and servers or getting higher-end hardware (GPUs for the system) can be a bit costly.

2. Complexity: Running LLM locally can be challenging, time-consuming, and requires technical expertise in case of bugs or maintenance. Both software and infrastructure need regular maintenance for optimum performance.

3. Lack of Scalability: On-demand scalability would be a task, as the storage and performance are limited and need special attention to upscale the system.

4. Manual Updates: Keeping the model up to date requires manual operation each time which can be time-consuming and prone to errors.

5. Energy Consumption: Running LLM requires higher computational power and it requires higher energy, ultimately leading to increased energy costs.

How to Run LLM Locally?

Let us first understand what we need to achieve in order to proceed with the LLM setup guide. You want to run a language model (like ChatGPT) on your machine. It should have the capability to perform tasks like generating text, answering questions, or even helping with customer service, all on your own computer. So, let us now go through the steps involved in LLM implementation in your local system and leveraging its power for your business.

Choose the Right Tool

- Smaller Models: If you're running on a CPU, choose smaller models that are more manageable, like GPT-2 or smaller variants of GPT-3, GPT-Neo, DistilGPT2, or similar models.

- Larger Models: If you’re using a high-end GPU, choose to run larger versions of GPT-3-like models such as GPT-NeoX, GPT-J, or you can even opt for some of the larger Hugging Face models.

Set Up Your Environment

- Hardware Requirements: Ensure you have a multi-core CPU with at least 8GB of RAM, though 16GB or more is recommended for better performance. Any GPU by Nvidia can be chosen with at least 4GB RAM to get optimum performance.

- Software Requirements: Python and necessary libraries. TensorFlow or PyTorch to support your GPU. Install Nvidia's CUDA Toolkit (parallel computing platform and programming model) and cuDNN (GPU-accelerated library for deep neural networks) to improve the performance and efficiency of the GPU.

- Create a Workspace: Your files and model's performance storage must not intertwine together or else it might alter the result. Create a workspace where all the model's work happens, so it doesn’t mix up with your other files.

Get the Model

As you have set up your environment, you can download the model, whether an OpenAI or a Hugging Face model for your customized LLM. You might require a little coding help here, for which you can seek technical expertise. You will also need to optimize the model according to your system capabilities at this stage for better and more efficient performance. You can use the mixed precision technique for a balanced performance or the distributed training technique if you have multiple GPUs for better performance.

Use the Model

Now the model is ready to be used. Optimize and deploy it according to your business requirements. If only the CPU is used, the LLM would take a little more time compared to the LLM running on GPU. With GPU, you can also increase the response rate even further by using lower-precision models (like FP16). It would use less memory, and run faster with little to no quality compromise. GPU would allow you to process larger batches for simultaneous responses which can be very beneficial for real-time LLM applications like chatbots or virtual assistants that need quick and simultaneous responses.

Using the LLM for Business

Now that you have a basic understanding of how to set up and run a language model (LLM) on your computer, let's explore some specific ways this can be useful for your business. Whether you're managing customer interactions, creating marketing content, or brainstorming new ideas, an LLM can be a powerful tool in your arsenal.

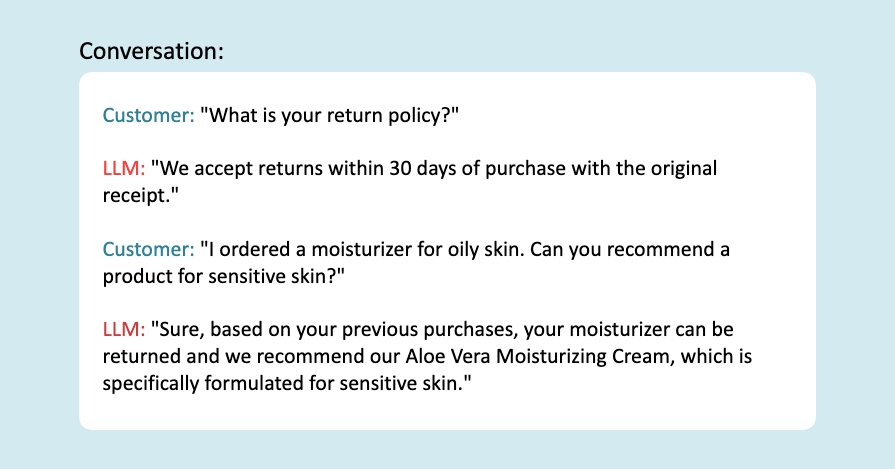

Automating Customer Service

Automating responses to your customer inquiries would be one of the most straightforward use cases. Use LLM for business automation and fine-tune the model with the frequently asked questions, your product data, customer website interactions, and more.

Example:

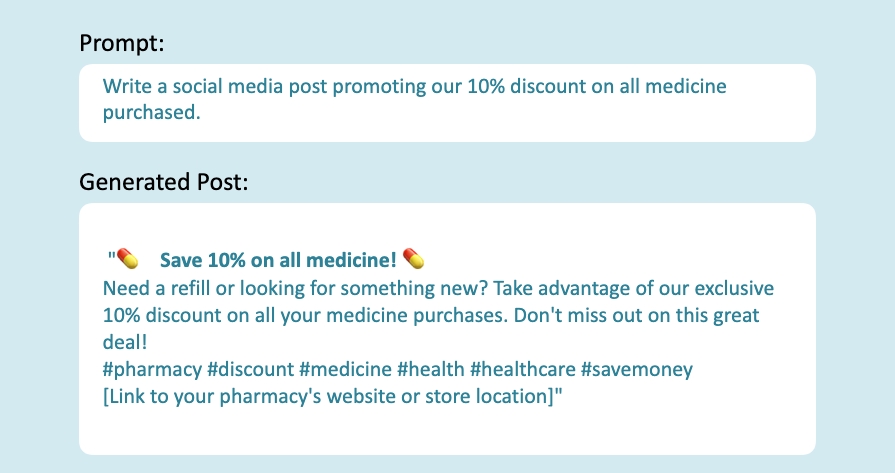

Creating Marketing Content

A small business has every member working in multiple departments to make business. LLM can reduce your workload by generating marketing content according to your business. Generate social media posts, blog articles, or email campaign content for your business.

Generating fresh and engaging content is a challenge for any small business. An LLM can be your creative assistant in this area.

Example:

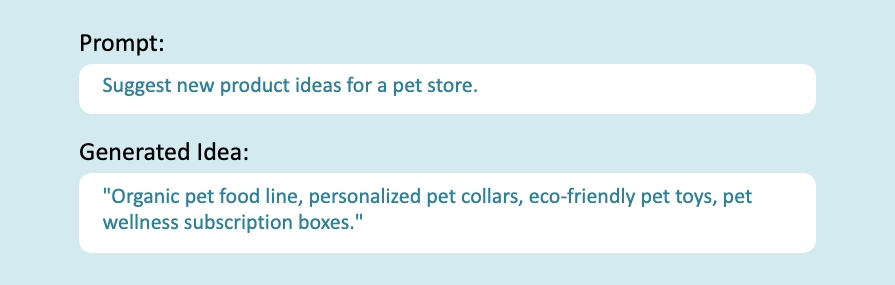

Brainstorming and Creative Writing

Sometimes you get a creative block in your artist side and you need a creative push. LLM can just do that and become your valuable brainstorming partner. You can use it to generate new product designs, taglines for your brand, or even an idea for your desired product.

Example:

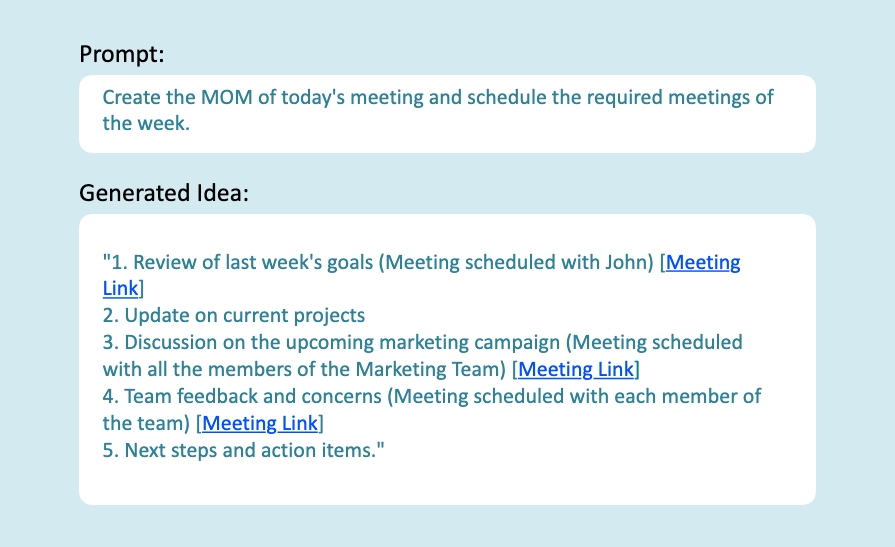

Enhancing Internal Operations

Apart from offering customer services, LLM can also help with the internal operational tasks of the business. Use the power of LLM to generate the quarterly summaries, meeting agendas, and MOMs undefined schedule tasks based on them.

Example:

End Note

With a bit of setup, you can use these models to help with your business tasks like customer service, marketing, and content creation, all from your own computer. If you have a GPU, use it to run the model at a much faster speed and handle more demanding tasks, like generating longer texts or using larger models. Integrate LLM with your business operations to save time, enhance creativity, and improve your customer satisfaction. Start small and get your way through all the operations to see which application gives you the best result.

We, at Seaflux, are AI undefined Machine Learning enthusiasts, who are helping enterprises worldwide. Have a query or want to discuss AI projects where NLTK or spaCy can be leveraged? Schedule a meeting with us here, we'll be happy to talk to you.

Aashutosh Mishra

Senior Marketing Executive